Anthropic’s Claude Opus 4.6 Claims Top Spot in AI Rankings, Beating OpenAI and Google

Anthropic is on a roll. First, the AI startup founded by former OpenAI employees shook up the SaaS sector with Claude Cowork; then it followed up with its latest AI model, Claude Opus 4.6. Just days after last Thursday's launch, one thing is already clear: Anthropic's newest LLM has climbed to the top of the rankings, beating competitors such as Google's Gemini.

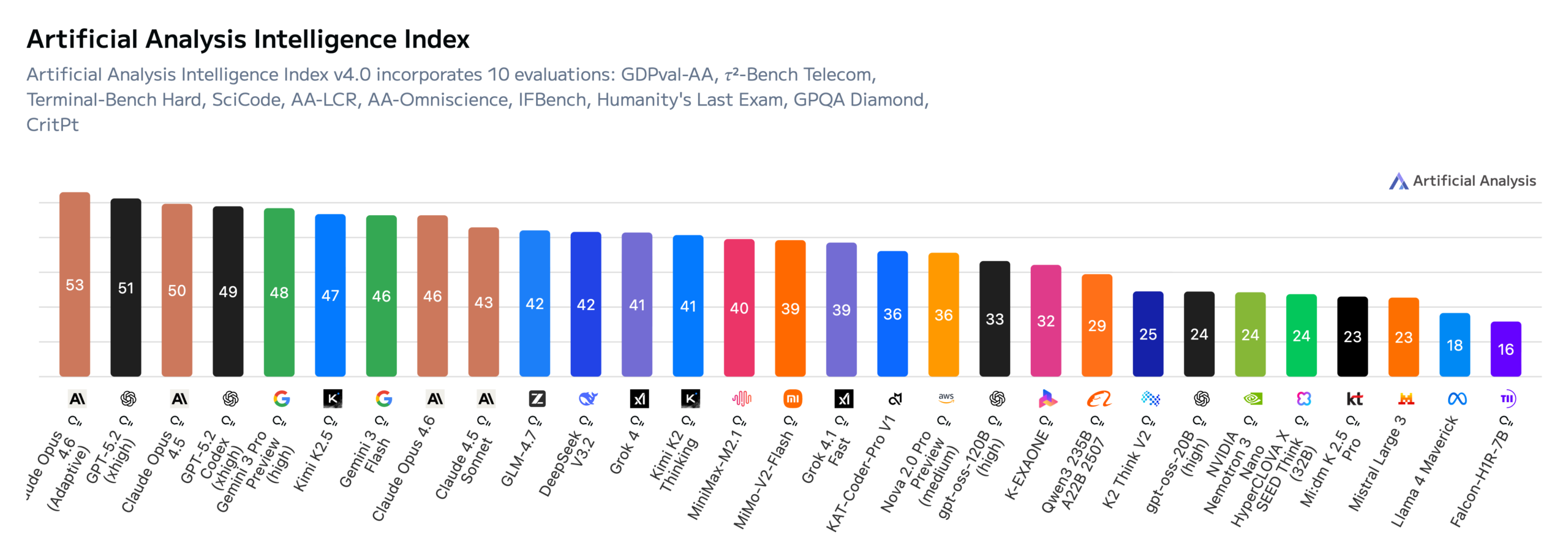

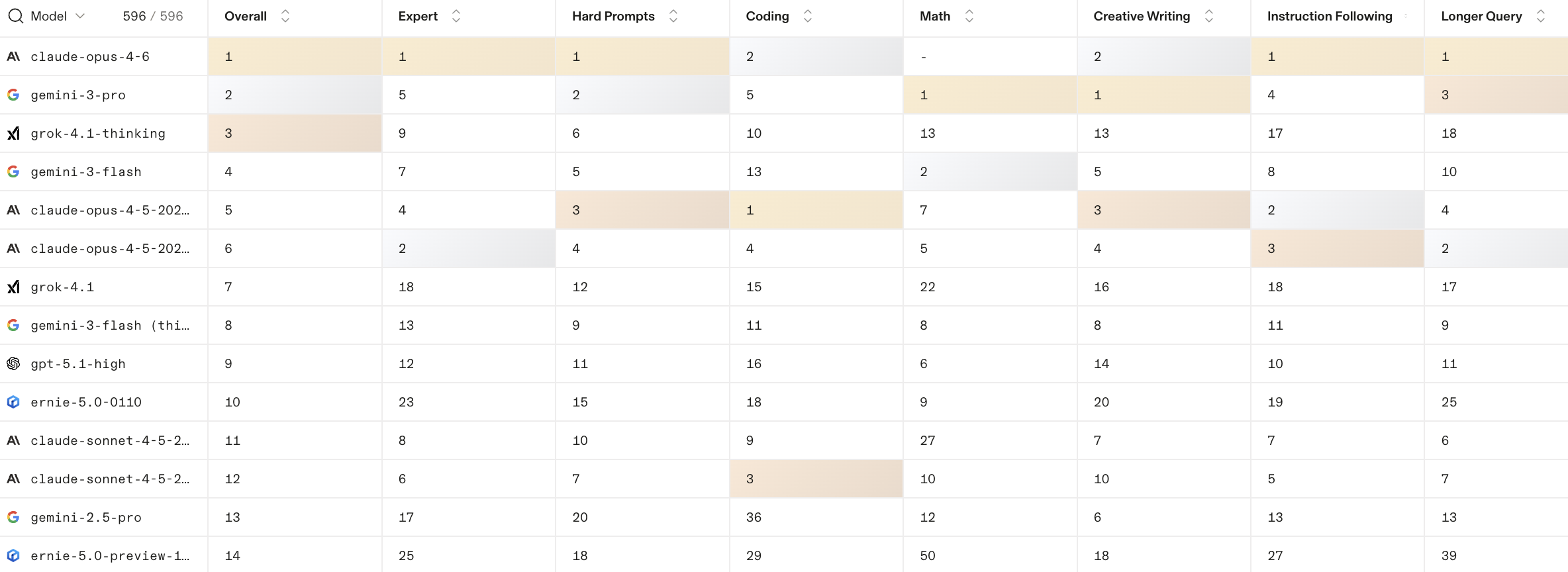

Claude Opus 4.6 already holds first place in the Artificial Analysis ranking, ahead of OpenAI's GPT-5.2 and its own predecessor, Claude Opus 4.5. Artificial Analysis compares AI models across a range of benchmarks, including the well-known "Humanity's Last Exam". Anthropic's model performs particularly well in the AI agents category. Interestingly, OpenAI's GPT-5.2 currently leads in coding, a category that has traditionally been Anthropic's stronghold.

Claude Opus 4.6 leads not only in these benchmark tests but also among users of Arena.ai. On that platform, users rate the output of AI models in blind side-by-side comparisons, and the rankings are built from those votes. The new LLM ranks first overall and scores well in subcategories such as writing, coding, and instruction following. Because Anthropic does not (yet) offer models for image or video generation, those categories remain dominated by other providers.

Good, but also expensive

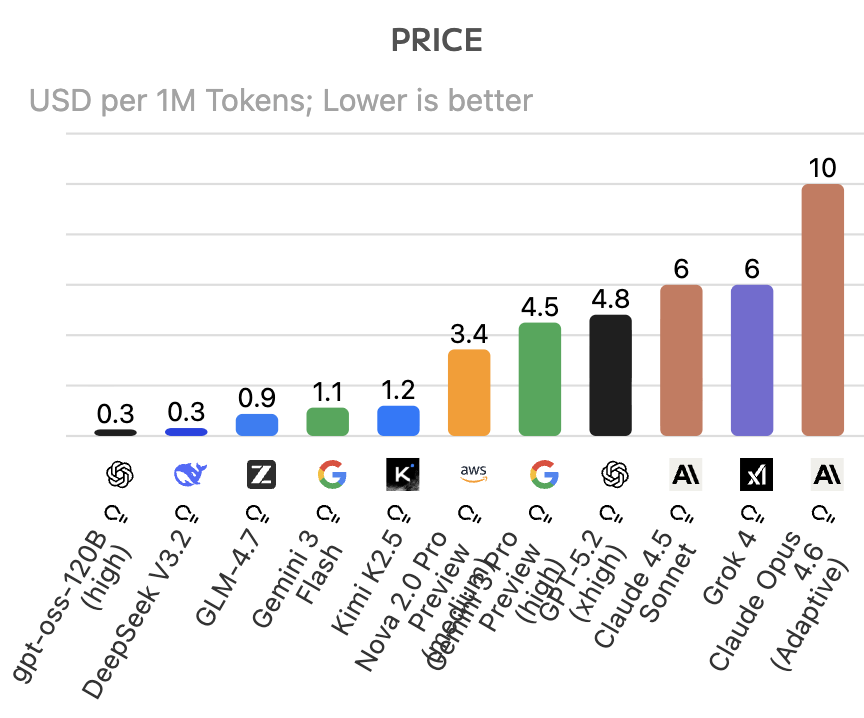

Quality comes at a price: according to Artificial Analysis, Claude Opus 4.6 is currently also the most expensive AI model. Anyone who integrates the LLM into their apps via the API pays significantly more for input and output tokens than they would for models from competitors such as OpenAI or Google.

Pricing is unchanged from the predecessor model: $5 per million input tokens and $25 per million output tokens. For prompts exceeding 200,000 input tokens, premium rates of $10 per million input tokens and $37.50 per million output tokens apply.
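To make these rates more concrete, here is a minimal Python sketch that estimates the cost of a single API request from the per-million-token prices quoted in the article. The function name `estimate_cost_usd` is hypothetical, and the rule that the premium rates apply to the entire request (input and output) once the input exceeds 200,000 tokens is an assumption made for illustration. Check Anthropic's official pricing documentation before relying on the numbers.

```python
# Hypothetical cost estimator for one Claude Opus 4.6 API request,
# based on the per-million-token prices quoted in the article.
# ASSUMPTION: if the input exceeds 200,000 tokens, the premium rates
# apply to the whole request (both input and output tokens).

STANDARD_INPUT_PER_M = 5.00    # USD per million input tokens
STANDARD_OUTPUT_PER_M = 25.00  # USD per million output tokens
PREMIUM_INPUT_PER_M = 10.00    # USD per million input tokens (long prompts)
PREMIUM_OUTPUT_PER_M = 37.50   # USD per million output tokens (long prompts)
LONG_CONTEXT_THRESHOLD = 200_000  # input tokens above which premium applies


def estimate_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Return the estimated cost in US dollars for a single request."""
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    # Pick the rate tier based on the size of the prompt.
    if input_tokens > LONG_CONTEXT_THRESHOLD:
        in_rate, out_rate = PREMIUM_INPUT_PER_M, PREMIUM_OUTPUT_PER_M
    else:
        in_rate, out_rate = STANDARD_INPUT_PER_M, STANDARD_OUTPUT_PER_M
    # Prices are per million tokens, so divide the weighted sum by 1e6.
    return (input_tokens * in_rate + output_tokens * out_rate) / 1_000_000


if __name__ == "__main__":
    # 100k input + 10k output at standard rates: 0.50 + 0.25 = 0.75 USD
    print(f"${estimate_cost_usd(100_000, 10_000):.2f}")
    # 300k input + 10k output at premium rates: 3.00 + 0.375 = 3.375 USD
    print(f"${estimate_cost_usd(300_000, 10_000):.3f}")
```

The example shows why long prompts matter: tripling the input from 100,000 to 300,000 tokens raises the estimated cost of this request by more than four times, because crossing the 200,000-token threshold also switches both rates to the premium tier (under the stated assumption).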